Reducing the play of chance using meta-analysis

Combining data from similar studies (meta-analysis) can help to provide statistically more reliable estimates of treatment effects.

Systematic reviews of all the relevant, reliable evidence are needed for fair tests of medical treatments. To avoid misleading conclusions about the effects of treatments, people preparing systematic reviews must take steps to avoid biases of various kinds, for example, by taking account of all the relevant evidence and by avoiding biased selection from the available evidence.

Even when care is taken to minimize biases in reviews, misleading conclusions about the effects of treatments may still result from the play of chance. Discussing separate but similar studies one at a time in systematic reviews can also leave a confused impression, because the play of chance makes their individual results vary. If it is both possible and appropriate, this problem can be reduced by combining the data from all the relevant studies, using a statistical procedure now known as ‘meta-analysis’.

Most statistical techniques used today in meta-analysis derive from the work of the German mathematician Carl Friedrich Gauss and the French mathematician Pierre-Simon Laplace during the first half of the 19th century. One of the fields in which their methods found practical application was astronomy: measuring the position of stars on a number of occasions often resulted in slightly different estimates, so techniques were needed to combine the estimates to produce an average derived from the pooled results. In 1861, the British Astronomer Royal, George Airy, published a ‘textbook’ for astronomers (Airy 1861) in which he described the methods used for this process of quantitative synthesis. Just over a century later, an American social scientist, Gene Glass, named the process ‘meta-analysis’ (Glass 1976).

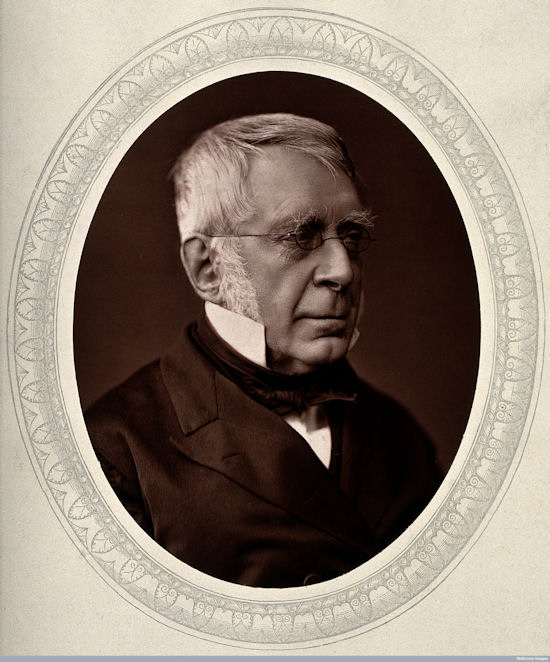

An early medical example of meta-analysis was published in the British Medical Journal in 1904 by Karl Pearson (Pearson 1904; O’Rourke 2006), who had been asked by the government to review evidence on the effects of a vaccine against typhoid. Although methods for meta-analysis were developed by statisticians over the subsequent 70 years, it was not until the 1970s that they began to be applied more widely, initially by social scientists (Glass 1976), and then by medical researchers (Stjernswärd 1974; Stjernswärd et al. 1976; Cochran et al. 1977; Chalmers et al. 1977; Chalmers 1979; Editorial 1980).

Meta-analysis can be illustrated using the logo of The Cochrane Collaboration, which depicts a meta-analysis of data from seven fair tests. Each horizontal line represents the result of one test (the shorter the line, the more certain the result). The vertical line marks the position around which the horizontal lines would cluster if the two treatments compared in the trials had similar effects. If a horizontal line crosses this vertical ‘no difference’ line, that particular test found no clear (‘statistically significant’) difference between the treatments: on its own, it suggests that the treatment might either increase or decrease infant deaths. Taken together, however, the horizontal lines tend to fall on the beneficial (left) side of the ‘no difference’ line. The diamond represents the combined result of these tests, generated using the statistical process of meta-analysis. Its position clearly to the left of the ‘no difference’ line indicates that the treatment is beneficial.
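As a rough numerical illustration of how a chart like the logo is read, the sketch below pools several trials using the inverse-variance (fixed-effect) method, one common approach to meta-analysis. The essay does not say which method underlies the logo, so this choice is an assumption. The trial counts are hypothetical, invented for illustration, and are not the steroid trials. Each trial's odds ratio and 95% confidence interval correspond to one horizontal line, the pooled estimate corresponds to the diamond, and an odds ratio of 1 is the ‘no difference’ line. The helper names (`log_odds_ratio`, `pooled_fixed_effect`, `interval`) are likewise hypothetical.

```python
import math

# Hypothetical trial results (NOT the steroid trials shown in the logo):
# (deaths_treated, total_treated, deaths_control, total_control)
TRIALS = [
    (4, 50, 8, 50),
    (10, 120, 15, 118),
    (3, 40, 5, 42),
    (20, 300, 31, 305),
]

Z95 = 1.96  # approximate normal quantile for a two-sided 95% interval


def log_odds_ratio(a, n1, c, n2):
    """Return (log odds ratio, standard error) for one trial, using Woolf's formula."""
    b, d = n1 - a, n2 - c  # survivors in each arm
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, se


def pooled_fixed_effect(estimates):
    """Inverse-variance weighted average of (log OR, se) pairs and its standard error."""
    weights = [1 / se ** 2 for _, se in estimates]
    pooled = sum(w * y for w, (y, _) in zip(weights, estimates)) / sum(weights)
    return pooled, math.sqrt(1 / sum(weights))


def interval(y, se):
    """95% CI, converted back to the odds-ratio scale (OR of 1 = no difference)."""
    return math.exp(y - Z95 * se), math.exp(y + Z95 * se)


estimates = [log_odds_ratio(*t) for t in TRIALS]
for i, (y, se) in enumerate(estimates, start=1):
    lo, hi = interval(y, se)
    crosses = lo < 1 < hi  # this 'horizontal line' crosses the 'no difference' line
    print(f"trial {i}: OR {math.exp(y):.2f} ({lo:.2f} to {hi:.2f}) crosses 1: {crosses}")

y, se = pooled_fixed_effect(estimates)
lo, hi = interval(y, se)
print(f"pooled:  OR {math.exp(y):.2f} ({lo:.2f} to {hi:.2f}) crosses 1: {lo < 1 < hi}")
```

With these made-up numbers every individual interval crosses 1, while the pooled interval lies entirely below 1, mirroring the pattern described for the logo. Woolf's standard-error formula breaks down when any cell count is zero, and real reviews often consider random-effects models as well, so this is a sketch rather than a complete method.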

This diagram shows the results of a systematic review of fair tests of a short, inexpensive course of a steroid drug given to women expected to give birth prematurely. The first of these tests was reported in 1972. The diagram summarises the evidence that would have been revealed had the available tests been reviewed systematically a decade later, in 1981: it indicates strongly that steroids reduce the risk of babies dying from the complications of immaturity. By 1991, seven more trials had been reported, and the picture in the logo had become still stronger.

No systematic review of these trials was published until 1989 (Crowley 1989; Crowley et al. 1990), so most obstetricians, midwives, and pregnant women did not realise that the treatment was so effective. After all, some of the tests had not shown a ‘statistically significant’ benefit, and perhaps only these tests had been noticed. Because no systematic reviews had been done, tens of thousands of premature babies suffered, and many died unnecessarily; resources were also wasted on unnecessary intensive care and research. This is just one of many examples of the human costs that can result from failure to assess the effects of treatments in systematic, up-to-date reviews of fair tests, using meta-analysis to reduce the likelihood that the play of chance will be misleading.

By the end of the 20th century it had become widely accepted that meta-analysis was an important element of fair tests of treatments, and that it helped to avoid incorrect conclusions that treatments had no effects when they were, in fact, either useful or harmful.

The text in these essays may be copied and used for non-commercial purposes on condition that explicit acknowledgement is made to The James Lind Library (www.jameslindlibrary.org).

References

Airy GB (1861). On the algebraical and numerical theory of errors of observations and the combination of observations. London: Macmillan.

Chalmers I (1979). Randomized controlled trials of fetal monitoring 1973-1977. In: Thalhammer O, Baumgarten K, Pollak A, eds. Perinatal Medicine. Stuttgart: Georg Thieme, 260-265.

Chalmers TC, Matta RJ, Smith H, Kunzler A-M (1977). Evidence favoring the use of anticoagulants in the hospital phase of acute myocardial infarction. New England Journal of Medicine 297:1091-1096.

Cochran WG, Diaconis P, Donner AP, Hoaglin DC, O’Connor NE, Peterson OL, Rosenoer VM (1977). Experiments in surgical treatments of duodenal ulcer. In: Bunker JP, Barnes BA, Mosteller F, eds. Costs, risks and benefits of surgery. Oxford: Oxford University Press, pp 176-197.

Crowley P (1989). Promoting pulmonary maturity. In: Chalmers I, Enkin M, Keirse MJNC, eds. Effective care in pregnancy and childbirth. Oxford: Oxford University Press, pp 746-762.

Crowley P, Chalmers I, Keirse MJNC (1990). The effects of corticosteroid administration before preterm delivery: an overview of the evidence from controlled trials. British Journal of Obstetrics and Gynaecology 97:11-25.

Editorial (1980). Aspirin after myocardial infarction. Lancet 1:1172-3.

Glass GV (1976). Primary, secondary and meta-analysis of research. Educational Researcher 5(10):3-8.

O’Rourke K (2006). An historical perspective on meta-analysis: dealing quantitatively with varying study results. The James Lind Library. http://www.jameslindlibrary.org/articles/a-historical-perspective-on-meta-analysis-dealing-quantitatively-with-varying-study-results/

Pearson K (1904). Report on certain enteric fever inoculation statistics. BMJ 3:1243-1246.

Stjernswärd J (1974). Decreased survival related to irradiation postoperatively in early operable breast cancer. Lancet 2:1285-1286.

Stjernswärd J, Muenz LR, von Essen CF (1976). Postoperative radiotherapy and breast cancer. Lancet 1:749.
